Intel's High Performance GPU, Intel Arc Alchemist

Thread started by cm2

- Credits

- 42,798

It is nice to see that Intel is improving its CPUs and GPUs, so people can play games at higher-quality video settings.

- Credits

- 124,538

Can it even be made?

- Credits

- 42,798

Can it even be made?

It probably can be made, but I feel it may be expensive to buy, like most new tech.

I think the chip shortage caused by COVID lockdowns and restrictions may cause us to wait longer for newer and faster processors from Intel.

Read the Digital Foundry interview:

Digital Foundry: Obviously, moving into the dedicated graphics arena is a really interesting development for Intel. And it's been a long time in development, you've made no secret of your move here, but what is the overall strategy? Why are you getting into the dedicated graphics space?

Lisa Pearce: If you look at it, it's an incredible market. Many of us have been working in integrated graphics for a long time. Intel's been in the graphics business for two decades, doing lower power, constrained form factor solutions - and the market's hungry, I think, for more options in discrete graphics. So, we're super excited to go into it. It's definitely one that has big aspirations with Xe, it's a scalable architecture, not just for client, but also datacentre. It's a very interesting time, and I think the industry is looking forward to another player there.

Digital Foundry: Absolutely. There has been a convergence in terms of the needs of the markets, right? Obviously, Nvidia made a huge impact in terms of AI, ray tracing and all of this can converge with the gaming market - and it's great to see that Intel is joining the fray as well. So we've had the Alchemist GPU revealed. And I'm going to ask the question that you don't want me to ask - but where do you expect it to land in terms of the overall market? What is this GPU going to do for gamers? Which is to say, that's my question which doesn't involve the word 'performance' but is about performance!

Tom Petersen: Well, I would say of course, Richard, we're not going to talk about performance, so I can't directly answer your question. But I can say that as you walk through what is Alchemist, it is a full-featured gaming GPU first, right? There can be no question in your mind. And it's not an entry level GPU, it's definitely a competitive GPU. It's got all the features that are needed for next generation gaming. So, without talking about performance, I'm pretty excited about it.

Digital Foundry: Fantastic. And it looks like you're going to be first to the market with TSMC 6nm - interesting choice there. Can you actually quantify the advantages that this process might give you over what's already out on the market and what you'll be going up against in Q1 2022?

Tom Petersen: Well, again, that's getting a little bit too close to performance. But obviously, being in the next generation process ahead of other chips gives us an advantage. And there's plenty of experts out there that can give kind of technology roadmap projections, just not this one!

Digital Foundry: Looking at the architecture, we can see there's a focus on rasterisation, of course, but ray tracing and machine learning are front and centre, which is a kind of different strategy than we saw with AMD and RDNA 2. And it's more akin to what we see from Nvidia, focusing on machine learning and dedicated ray tracing cores, accelerating things like ray traversal, for example. Intel's going with that kind of approach, how come?

Tom Petersen: Well, to me, it's just the way that technology is evolving naturally. If you think about AI as being applied literally to every vertical application in the world, for sure, it's going to have a major impact on gaming. You can actually get better results than classic results by fusing AI-style compute to traditional rendering. And I think we're just at the beginning of that technology. Today, it's mostly working on post rendered pixels. But obviously, I mean, that's not the only place that AI is going to change gaming. So, I think that's just a natural evolution.

And again, when you think about ray tracing, it delivers a better result than traditional rendering techniques. It doesn't work everywhere and obviously, there's a performance trade-off. But it's just a great technique to improve the life of gamers. So, I think that these trends are going to continue to accelerate. And I think it's fantastic that there's some alignment actually, because that alignment between hardware vendors like Intel and Nvidia and even AMD... that alignment allows the ISVs to see a stable platform of features. And that grows the overall market for games and that's really what is good for everybody.

Digital Foundry: Just from an architectural standpoint, I'm curious. Why rely on dedicated hardware, for example, for the ray tracing unit, instead of repurposing current units that the GPU may already have, and relying on just general compute, for example?

Tom Petersen: The big reason there is because the nature of what's happening when you're doing ray traversal is very different. The algorithms are very different from a traditional multi-pixel per chunk, you know, multiple things in parallel. So think of it as you're trying to find where these rays are intersecting triangles. It's a branching style function, and you just need multiple different style of units. So that's really algorithmic, the preference would be, 'hey, let's run everything on shaders' but the truth is that's inefficient - although I do know that Nvidia did make a version of that available for their older GPUs. And you remember the performance difference between the dedicated hardware and the shader? It's dramatic. And it's because the algorithm is very, very different.
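
To make the "branching style function" point concrete, here is a minimal sketch of a single ray-triangle test using the textbook Möller-Trumbore method. This is an illustration only, not Intel's hardware algorithm; the function and helper names are made up for the example. Note how many early exits there are: neighbouring rays can leave at different points, which is the kind of divergence that wide, lock-step shader units handle poorly.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_hits_triangle(origin: Vec3, direction: Vec3,
                      v0: Vec3, v1: Vec3, v2: Vec3,
                      eps: float = 1e-9) -> Optional[float]:
    """Return the distance t along the ray to the hit point, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:          # branch 1: ray is parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det
    if u < 0.0 or u > 1.0:      # branch 2: outside the triangle along edge1
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det
    if v < 0.0 or u + v > 1.0:  # branch 3: outside the triangle along edge2
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # branch 4: triangle is behind the ray origin


triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(ray_hits_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *triangle))
print(ray_hits_triangle((2.0, 2.0, 1.0), (0.0, 0.0, -1.0), *triangle))
```

A real ray tracer runs a test like this many times per ray while walking an acceleration structure, so the branching compounds.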

Digital Foundry: A question on overall strategy: you've shown one chip design, although there was a slide with two chips. I'm assuming the plan is going to be that you'll have a stack, right? You will have different parts, there won't be just one GPU coming up. Does the DG1 silicon still have a role to play, or was that almost like a test run as such?

Lisa Pearce: Yeah, DG1 was our first step to go and work through a lot of the different issues and trying to make sure we prepped our stack was the first entry there, getting the driver tuned and ready. So, DG1 was very fundamental for us. But really, Alchemist is the start of just great graphics performance GPUs. And there'll be many following, which is the reason why we shared some of those code names. It's definitely a multi-year approach.

Digital Foundry: Let's move on to something that's actually just as fundamental to the project as the silicon, which is the software stack. This is something where it looks like it's been like an ongoing approach over many years. I guess I first started to take notice of Intel graphics more directly with Ice Lake [10th generation Core]. And obviously, you've come on leaps and bounds since then. But what is the strategy in developing the software stack? Where do you actually want to be when you end up launching in Q1 2022? Where are you now and what are the major accomplishments today?

Lisa Pearce: Well, you know, we've been preparing for Xe HPG for some time. And a big part of that is the driver stack having an architecture that can be scalable from integrated graphics with LP to HPG. And even as you talked about some of the other architectures with HPC, as well. And so, it's fundamental in the driver design, and it started last year. Last year at Architecture Day, we talked about Monza, it was a big change to our 3D driver stack to prepare for that scaling. So that's the first fundamental, then after that, trying to have the maturity in how we tune for different segments, different performance points and really squeeze out every aspect of each unique architecture product point.

So, within the driver, we've had four main efforts going on, especially this year preparing for this launch. You know, the first three are more general than that is specific to HPG particularly. So, trying to have local memory optimisations, how well do we use it? What's our memory footprint? Are we putting the right things in local memory for each title? Then the game load time performance... the load time there was about 25 percent average reduction this year. Some are much heavier. The work is continuing. So, we have a lot of work to do there continuing into Q1 for launch. Third was CPU utilisation, CPU bound titles, you know, I mentioned on average, we kind of stated things conservatively and 18 percent on average, [but] some titles [have] 80 percent reduction. So that was really the maturity of the Monza stack that we rolled out and trying to squeeze the performance from that. And then the last one, of course, how well does the driver feed the HPG, the larger architecture. And so all of these are continuing. It's a constant watch and tuning as new games, new workloads, especially on DX11/DX12. And you'll see that continue through Q1 for the launch.

Digital Foundry: Okay, because perception was that when we moved into the era of the low-level APIs, the actual driver optimisation from the vendor side would kind of take a backseat to what's happening with the developer. But that hasn't happened right?

Tom Petersen: No, it doesn't work that way. I mean, the low level APIs have given a lot more freedom to ISVs and they've created a lot of really cool technologies. But at the end of the day, a heavy lift is still required by the driver, the compiler alone is just a major contributor to overall frame performances. And that's going to continue to be something that we're going to work on, for sure.

Digital Foundry: Not just looking at the latest titles and the latest APIs, does Intel have any plans to increase its performance and compatibility with legacy titles in the early DX11 to pre-DX11 era, perchance?

Lisa Pearce: A lot of [driver optimisation] is based on popularity, more than anything, so we try to make sure that top titles people are using, those are the highest priority, of course... some heavy DX11 but also DX9 titles. Also, based on geographies, it's a different makeup of the most popular titles and APIs that are used - so it is general. But of course, the priority tends to fall heaviest with some of the newer modern APIs, but we still do have even DX9 optimisations going on.

Tom Petersen: There's a whole class of things that you can do to applications. Think of it as like outside the application, things that you can do like, 'Hey, you made the compiler faster, you can make the driver faster.' But what else can you do? There are some really cool things that you can be doing graphically, even treating the game as sort of a black box. And I think of all of that stuff as implicit. It's things that are happening without game developer integration. But there's a lot more stuff that you can do when you start talking about game integration. So I feel like Intel is at that place where we're on both sides, we have some things that we're doing implicit, and a lot of things that are explicit.

Digital Foundry: Okay. In terms of implicit driver functions, for example, does Intel plan to offer more driver features when its HPG line does eventually come out? Things like half refresh rate v-sync, controllable MSAA, VRS over-shading, for example, because I know Alchemist does support hardware VRS and it can use over-shading for VR titles. Are there any sort of very specific interesting things that we should expect at the HPG launch?

Tom Petersen: There are a lot of interesting features. I think of it as the goodness beyond just being a great graphics driver, right? You need to be a great performance driver, and competitive on a perf per watt and actually perf per transistor, you need to have all that. But then you also need to be pushing forward on the features beyond the basic graphic driver and you'll hear more about that as we get closer to HPG launch. So I say yes, I'm pretty confident.

- Credits

- 124,538

It probably can be made, but I feel it may be expensive to buy, like most new tech.

I think the chip shortage caused by COVID lockdowns and restrictions may cause us to wait longer for newer and faster processors from Intel.

Current delays are forecast to last until 2023.

- Credits

- 42,798

Current delays are forecast to last until 2023.

Hopefully, the delays don't last beyond 2023 because of newer coronavirus variants. I saw on the news that there are two new variants, called Delta and Mu, which are more infectious than the original COVID-19 coronavirus from China.

Read from Tom's Hardware: Intel's high-performance GPU, Arc Alchemist, is beaten by Nvidia's RTX 3070:

The flagship Intel Arc Alchemist graphics card hits 2.4 GHz in a new OpenCL benchmark. Nonetheless, the DG2 graphics card was still no match for Nvidia's GeForce RTX 3070 (Ampere), one of the best graphics cards on the market.

Barring any setbacks, Intel's DG2 family of discrete desktop graphics cards will arrive in the second quarter of the year. The flagship Arc Alchemist SKU will wield up to 512 execution units (EUs) and up to 16GB of GDDR6 memory. The graphics card has scaled up to 2.4 GHz in the new Geekbench 5 submission (via Benchleaks), likely the graphics card's boost clock. But, of course, it's an engineering sample, so the clock speed isn't final.

If we look at the performance on paper, the flagship Arc Alchemist should deliver around 20 TFLOPs of FP32 performance. Of course, it's not the best metric for gaming, but it's the only information available. Based on the FP32 performance, the DG2-512 theoretically performs similar to the often referenced GeForce RTX 3070. The AMD equivalent would be Radeon RX 6700 XT (Big Navi).
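
For anyone wondering where the "around 20 TFLOPs" figure comes from, here is a rough back-of-the-envelope sketch. The 512 EUs and 2.4 GHz are from the article; the 8 FP32 lanes per EU and 2 operations per lane per clock (one fused multiply-add) are my assumptions about the Xe execution unit, and the function name is just for illustration.

```python
def peak_fp32_tflops(execution_units: int, clock_ghz: float,
                     fp32_lanes_per_eu: int = 8,
                     flops_per_lane_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    fp32_lanes_per_eu and flops_per_lane_per_clock are assumed values
    (8-wide FP32 ALU, one fused multiply-add counted as 2 operations).
    """
    flops_per_clock = execution_units * fp32_lanes_per_eu * flops_per_lane_per_clock
    gflops = flops_per_clock * clock_ghz  # GHz * ops per clock = GFLOPS
    return gflops / 1000.0                # GFLOPS -> TFLOPS


dg2_512 = peak_fp32_tflops(execution_units=512, clock_ghz=2.4)
print(f"DG2-512 at 2.4 GHz: {dg2_512:.2f} TFLOPS")
```

This is only a paper number at the leaked engineering-sample clock, so it says nothing about how the card behaves in actual games.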

- Credits

- 124,538

So what magic does it do?

Not good enough from the leaks, lol.

Read this from TechRadar:

There are already genuine concerns around Intel’s Arc desktop GPUs, and particularly reports regarding the graphics driver, which seemingly can be wonky in some cases (with certain games suffering from serious woes or not even running at all). This is the main theory as to the reason for the delay of Arc desktop, and the way things have happened with a limited launch in China just to test the waters, with the software simply not being ready for a global rollout yet.

Obviously enough, when it comes to those Arc A380 benchmarks, we must also remember that Intel will have cherry-picked games to present the GPU in its best light. And we had concerns of our own that the graphics card is being compared to the RX 6400 in the first place – not a well-liked product, and a weak target to be taking pot-shots at in that respect.

While it does rather feel like everyone is piling on here, and Intel Arc desktop might be receiving an unfair early savaging, there is still hope in some respects. Pricing will still be key – and we’ll have to see what the price tag of the A380 is in markets like the US when it finally goes global – and those graphics drivers will get better over time.

But it sounds worryingly like there’s still a fair way to go with nailing the driver issues, and time isn’t a luxury Intel has when you consider that the next-gen GPUs from AMD and Nvidia are closing fast now, and maybe only a few months or so away. At which point Intel’s Arc flagship will be even further down the relative performance rankings when compared to RTX 4000 GPUs, rather than current-gen Nvidia RTX 3000 graphics cards, the same being true for AMD’s RDNA 3, of course.

- Credits

- 124,538

Sounds like something to avoid.

Indeed. They need to fix that ASAP or they will have no chance against Nvidia and AMD, given that they are a new player in GPU cards.

- Credits

- 42,798

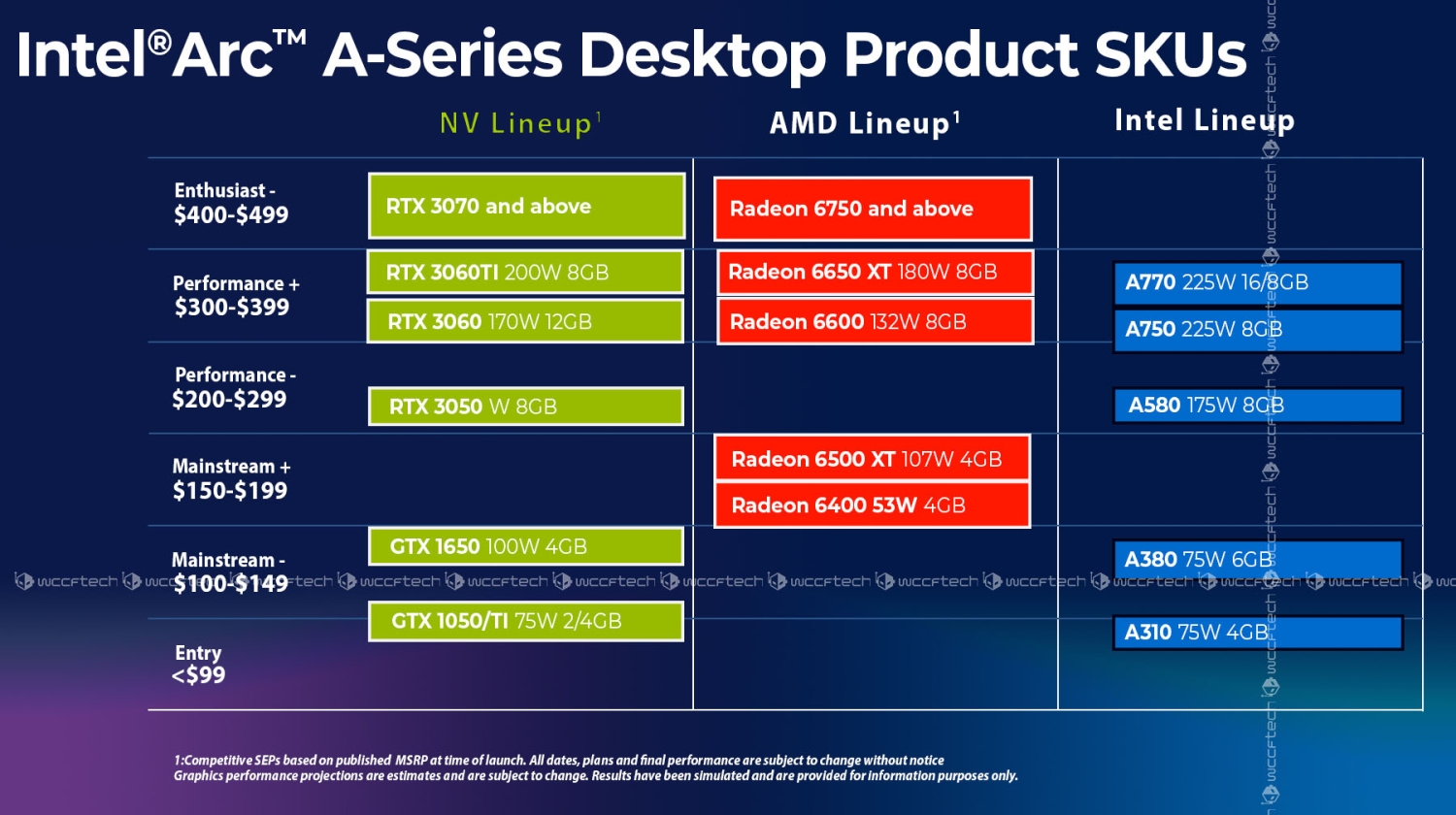

So the best version of Intel's Arc GPU will be around $300.

I feel $300 is an expensive price for a video card.

Read about the ray tracing performance of the card from TweakTown:

Intel's Ryan Shrout and Tom Peterson spoke with PCGamer's Senior Hardware Editor, Jacob Ridley, where they talked about a fair amount but when it came to ray tracing we've got some confident new comments from the company. The Ray Tracing Unit (RTU) on Arc GPUs is capable of delivering "real" ray tracing performance notes Tom Peterson, with the Arc A770 and Arc A750 battling the RTX 3060 and keeping up or beating it.

Tom continued, saying that the ray tracing performance Intel offers with its Arc GPUs is competitive or better than NVIDIA's ray tracing hardware. Tom said: "The RTU [ray tracing unit] that we have is particularly well suited for delivering real ray tracing performance. And you'll see that when you do ray tracing on comparisons with an [RTX] 3060 versus A750 or A770, we should fare very, very well. Yeah, we're definitely competitive or better than NVIDIA with ray tracing hardware".

Peterson continued: "We tried to make ours generic because we know that we're not the established GPU vendor, right. So all of our technology pretty much has to work with low dev rel (developer relations) or dev tech engagement. And so things like our cache structure and our hierarchy, you know, our thread sorting unit, which are the two techs that we're going to talk about in this video, they work without any dev rel or dev tech work".

"I'm kind of torn on this one. Because to your point, there's some things that you would normally expect to lag. And the reason you would expect them to lag is because they're hard, and they need to come after you have a solid base. But for better or worse, we just said we need all these things. And so we did XeSS, we did RT, we did AV1, we kind of have a lot on the plate, right? I think we've learned that maybe, you know, in this case, we have a lot on the plate and we're gonna land all the planes, and that's taken us longer than we would have expected".

Did they say if the Arc GPUs would have 3rd party models from the likes of ASUS and EVGA, or is it going to be made and sold by Intel only?

ASUS and MSI so far, and the rest will probably follow soon, though for now it's only for their "premade" branded PCs. Read from TweakTown:

Intel's new Arc A-series desktop GPUs launched without even a whimper, with the new GPUs being an exclusive to China for many months... but it appears AIB partners are now getting some of that Arc silicon to make entry-level graphics cards for some upcoming pre-built PCs.

GUNNIR started it all with their custom Arc A380 Photon OC 6G graphics card, then ASRock, and now we have ASUS and MSI leaping into the game. The Intel Arc A380 cards from ASUS and MSI comes from leaker "momomo_us" on Twitter, where MSI lists its new PC with an Intel Core i5-12400 or Core i5-12400F for the lower-end SKU, while the higher-end system has an Intel Core i7-12700 or Core i7-12700F processor.

These systems can be configured with 3 different GPUs: a custom MSI GeForce GTX 1650, an MSI GeForce GT 1030, or Intel DG2 A380 or Intel DG2 A310 graphics card. We should expect to see 4GB of VRAM on each of the Arc A380 and Arc A310 graphics cards, but there's no details on that with MSI's upcoming PC.